30.08.2017 – IBM Power8 Platform for Cognitive Computing and Deep Learning

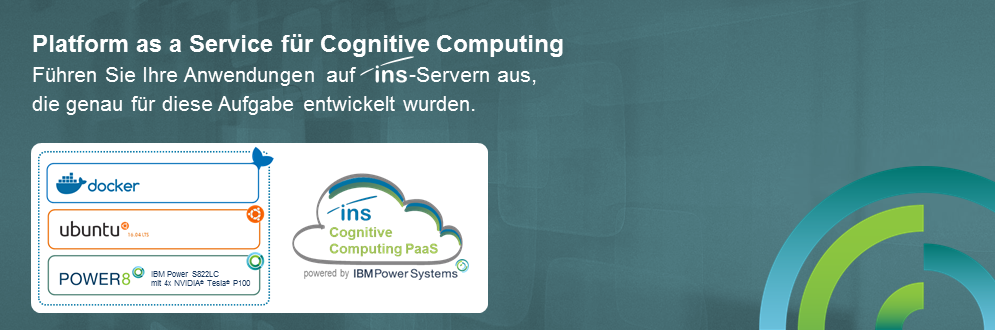

Cognitive systems are technologies that answer questions through in-depth processing and understanding of natural language. Such a system develops hypotheses and formulates possible answers based on the available information. It can be trained by analyzing large amounts of data, and it is able to learn from its mistakes and failures. Until now, using technology like this has required fast vector units: Intel x86 computers combined with fast graphics processors (GPUs) on PCI Express cards. However, these systems can only be as fast as their PCI Express bus; in other words, the installed GPUs are not used to their full potential. This year, IBM is introducing a new technology that improves on this significantly. INS now offers this technology as an attractive Platform-as-a-Service (PaaS) model, for any desired period of time, out of the German cloud.

Systems that combine IBM Power8 processors with Nvidia Tesla P100 GPUs connected via an NVLink interface (IBM Power 822LC) are currently the fastest and most accurate way to compute cognitive computing tasks. We have therefore decided to expand our managed service portfolio to include the latest Nvidia Tesla GPU technology. We consider it a seminal technology, and from September 2017 onwards we will offer these systems as part of our Managed Services, in various forms and within a transparent PaaS model.

We provide all interested customers with a cloud-based platform built on these systems. This allows you to run your own scenarios directly on our IBM Power 822LC systems and evaluate for yourself how fast and effective these systems are in practice, independent of any theoretical benchmarks. The advantage of this model is that test scenarios require no capital expenditure (CAPEX). Our Managed Service approach lets you use a powerful cognitive computing platform purely on the basis of operating expenses (OPEX). Consequently, you do not need to build up your own operations team to look after this specialized system.

Our container technology, based on Ubuntu 16.04 LTS and Docker, lets you build your own scenario on our platform from the containers (images) you need.

What’s more, infrastructure operation and system administration can be extended to more comprehensive scenarios, up to full operation of the systems including backup, archiving and disaster recovery.

By using the latest cloud technology, you free up resources that you can devote to your projects, applications and data. In other words, the infrastructure is “simply there”. Of course, we also offer this service in a variety of scenarios as an on-premise solution, whether in your data center or on your campus.

To give you an idea of the advantage gained by combining IBM Power, NVLink and the Nvidia Tesla P100, we present a benchmark of the expected transfer performance (the NVIDIA CUDA example Bandwidth Test):

Fig.: Host <-> Device via NVLink

The same transfer over PCI Express on an x86 computer:

Fig.: Host <-> Device via PCI Express

The “Device to Device” bandwidth value refers to transfers between two local GPUs in the same system. The values show that CPU-to-GPU transfer performance with NVLink is higher than with PCI Express by a factor of 3.
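Bandwidth figures from tests like this one boil down to bytes transferred divided by elapsed time, and the “factor of 3” is simply the ratio of two such values. The following Python sketch illustrates only that arithmetic. The function names `bandwidth_gb_per_s` and `speedup` are hypothetical, the inputs are made-up illustrative numbers rather than the Microway measurements, and decimal units (1 GB = 10^9 bytes) are assumed.

```python
def bandwidth_gb_per_s(bytes_per_transfer: int, repetitions: int, elapsed_s: float) -> float:
    """Average bandwidth in GB/s (decimal, 1 GB = 10**9 bytes) for repeated copies."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    total_bytes = bytes_per_transfer * repetitions
    return total_bytes / elapsed_s / 1e9


def speedup(faster_gb_s: float, slower_gb_s: float) -> float:
    """Factor by which one bandwidth exceeds another."""
    if slower_gb_s <= 0:
        raise ValueError("slower_gb_s must be positive")
    return faster_gb_s / slower_gb_s


if __name__ == "__main__":
    # Illustrative inputs only (not measured): a 32 MiB buffer copied 100 times
    # over two hypothetical links that need 0.1 s and 0.3 s respectively.
    size = 32 * 1024 * 1024
    fast = bandwidth_gb_per_s(size, 100, 0.1)
    slow = bandwidth_gb_per_s(size, 100, 0.3)
    print(f"fast link: {fast:.2f} GB/s")
    print(f"slow link: {slow:.2f} GB/s")
    print(f"speedup:   {speedup(fast, slow):.1f}x")
```

When both links move the same amount of data, the buffer size and repetition count cancel out, so the ratio depends only on the two elapsed times.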

This bandwidth advantage translates into unprecedented speed when executing projects. For further details on this benchmark, see the following website: https://www.microway.com/hpc-tech-tips/comparing-nvlink-vs-pci-e-nvidia-tesla-p100-gpus-openpower-servers/

The benchmark data is published with the kind permission of Microway, Inc., Plymouth, MA.